In 2026, accountability stops being a shield. It becomes a spotlight – and it’s pointed at you.

For years, compliance has been treated as a process. A set of controls. A box to tick. A department to manage risk on behalf of the business. That mindset is outdated.

2026 marks a deeper shift: accountability is no longer something organisations have. It is something leaders carry. Not symbolically. Not rhetorically. But in a very real sense.

One of the main triggers for this change is the widespread adoption of generative AI. Not because AI created the problems, but because it exposed them. As AI systems automate decisions and amplify them at scale, they shine a light on long-standing governance gaps around access, ownership and decision logic. At the same time, incoming EU regulations such as NIS2 and the Cyber Resilience Act signal a clear direction of travel: accountability is moving away from procedural box-ticking and toward identifiable human responsibility.

The ongoing UBS and Credit Suisse case is an early public signal of this shift. In short, Swiss prosecutors have charged UBS over Credit Suisse–era money laundering controls, and the case extends to a former compliance employee accused of failing to report suspicious transactions. It shows what happens when historical decisions, opaque governance structures and modern expectations collide. And it raises a broader leadership question: when systems make decisions, who is accountable?

This article explores four signals that are shaping that answer.

Signal 1: Accountability Becomes Personal

The UBS/Credit Suisse case has attracted attention not only because of its legal complexity, but because of where public scrutiny is now focused. The conversation isn’t limited to organisational failure anymore. It has shifted toward individual responsibility inside governance systems.

Swiss business law expert Peter V. Kunz framed this clearly when commenting on the case: the reputational damage of a conviction would outweigh the financial penalty. That distinction matters. It tells us that accountability is becoming reputational, social and personal, not just legal.

This isn’t theoretical. It creates real consequences for your business. As Kunz notes in a recent interview: “The fact that an ordinary employee is now being prosecuted is likely to be a shock for the staff. Be careful; if you make mistakes, you risk lengthy and expensive legal proceedings.”

As a result, pressure increases on those required to sign off on complex, interconnected systems. Professionals working in compliance, risk and governance realise they face more personal responsibility. Decision-making slows as people become more cautious.

This changes the task fundamentally for leaders. Accountability can no longer be reconstructed after the fact. It must be designed into how decisions are made. Ownership needs to be visible, responsibilities explicit, and approval logic clear. And this needs to happen before regulators or the public start asking difficult questions.

The uncomfortable truth is this: if you don’t design accountability intentionally, it will be assigned retroactively, under pressure, and potentially unfairly.

Signal 2: Regulation Moves Faster Than Culture

NIS2 and the Cyber Resilience Act are often discussed as regulatory frameworks. But their deeper meaning is cultural. They reflect societal expectations around resilience, trust and responsibility in a digital economy.

Nowhere is this more visible than in critical sectors such as energy and infrastructure. Here, operational disruption is not just an internal risk. It becomes a public concern. As a result, operational resilience is no longer optional. It is increasingly treated as part of compliance itself.

This creates new realities:

- Audit pressure becomes continuous rather than periodic.

- Supply-chain accountability expands beyond organisational boundaries.

- Resilience becomes a leadership concern, not just an IT responsibility.

The problem is that organisational culture rarely moves at the same speed as regulation. Policies can be updated quickly. Behaviour, incentives and decision habits cannot.

This is where many leadership teams will feel the strain. Doing the minimum to stay compliant is no longer enough. The real challenge is aligning everyday behaviour with the intent of regulation – building trust, strengthening resilience and embedding responsibility across the business.

At its core, this is a shift from obligation to responsibility. From “what do we have to do?” to “who are we as an organisation?”

Signal 3: AI Makes Data Quality a Leadership Issue

The explosion of generative AI tools has introduced a new kind of transparency into governance. Not through visibility, but through consequence.

The chain is simple and unforgiving: Bad access → bad data → unreliable AI → regulatory and reputational risk.

This moves data quality out of the technical domain and into the boardroom. AI projects do not fail because algorithms are weak. They fail because governance foundations are fragile.

This creates a new accountability frontier for you to navigate. Data quality is no longer a back-office task. It becomes a strategic asset tied to decision quality, trust and long-term competitiveness.

There is also a structural risk emerging alongside this shift: dependency. As organisations rush to adopt large language models (LLMs) and cloud-based AI platforms, many are outsourcing not just infrastructure, but control. Recent headlines about an “AI bubble” are a warning sign. Investment and excitement are moving fast, but governance is struggling to keep up.

Without control over the data that powers AI systems, organisations risk building their future on platforms they don’t fully own. And as access and pricing become concentrated in the hands of a few dominant providers, the strategic cost of dependency – and the responsibility leaders carry for it – continues to grow.

This is where ownership becomes central. If you don’t protect and govern your own data ecosystems, you risk losing control of the very assets that power growth.

In the AI era, leadership accountability isn’t about picking the right model. It’s about owning the data foundations that make intelligence something you can trust.

Signal 4: Complexity Becomes a Human Risk

Technology allows us to design ever more complex systems and processes. But we rarely stop to ask what this complexity is doing to the people expected to work inside them.

The emerging risk is not primarily malicious behaviour. It is cognitive overload: good people working inside systems that are too complex to understand, too fragmented to navigate safely, and too opaque to support confident decision-making.

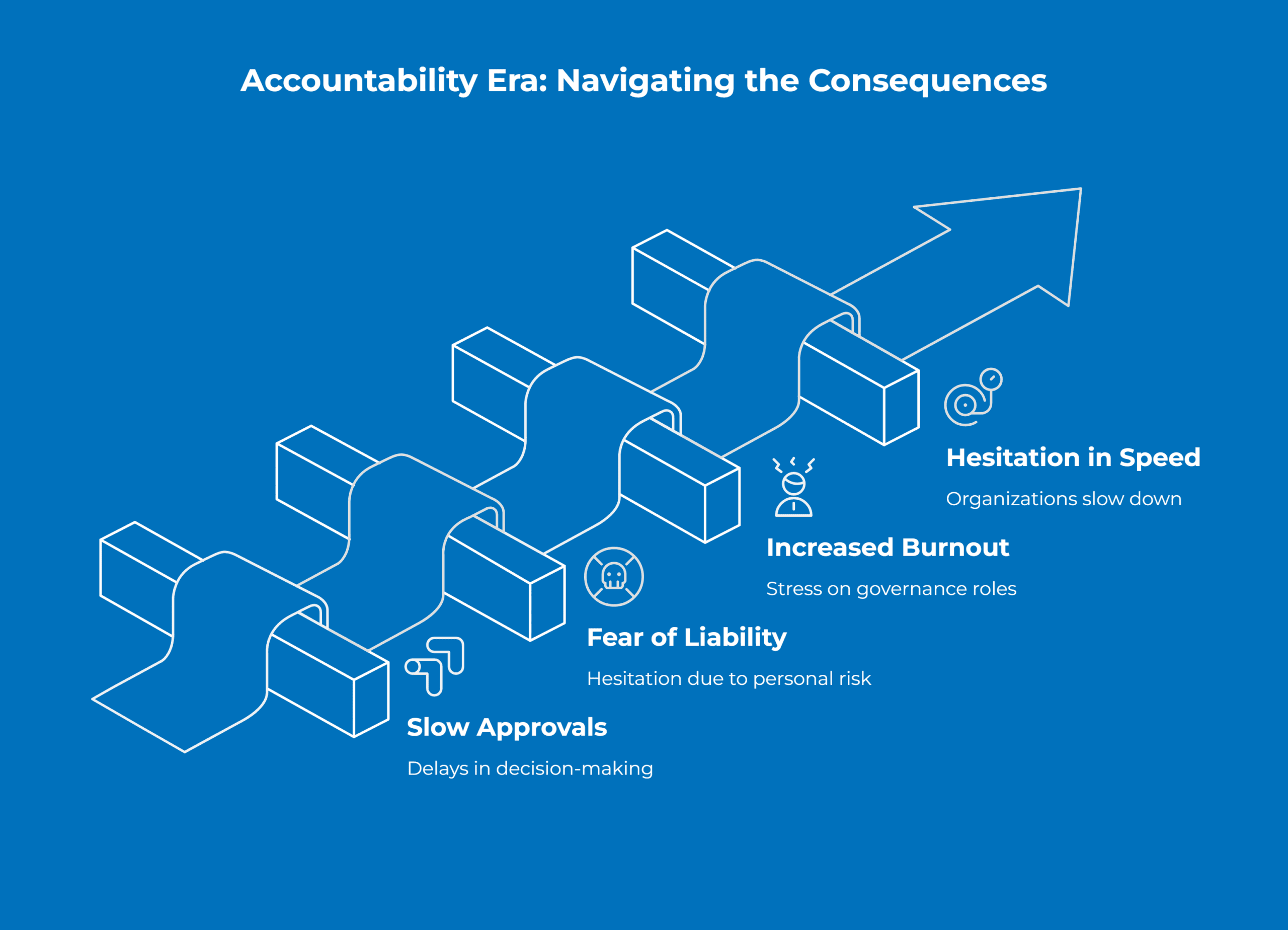

The consequences are already visible as we move into this new accountability era:

- Approvals slow down.

- Fear of personal liability grows.

- Burnout increases in governance and compliance roles.

- Organisations hesitate when speed is needed.

When complexity overwhelms people, accountability becomes distorted. Responsibility shifts downward to individuals who lack the tools, visibility or authority to carry it safely.

You have a clear choice here. Governance can be designed to expose people to risk. Or it can be designed to protect them. The most resilient organisations build accountability into everyday work, so people are supported by the system instead of left to carry the weight alone.

This is not a soft leadership issue. It directly shapes performance, trust and organisational stability.

Conclusion: Designing Accountability for What Comes Next

The UBS case isn’t just another compliance headline to be archived and forgotten. It’s a warning signal. For years, organisations have acted as buffers between individual decision-makers and public accountability. That buffer is thinning. Between the tightening pressure of NIS2 and the growing opacity of AI-driven logic, the old defence of “system failure” is losing credibility.

In 2026, regulators and the public are looking past the logo and toward the person holding the pen (or the prompt). If you design these systems, you carry responsibility for their outcomes. We are moving away from a world of box-ticking and into a world of ownership.

The real question for the C-suite today is not whether your AI is technically compliant. It’s whether you’re prepared to stand behind a decision you didn’t make personally, but for which you are now held legally and publicly responsible. Accountability can no longer be delegated to a department. It is a leadership position.
